Testing in the Wild: Exploring the Advantages of Serving a SaaS Product

On-premise scenarios often consist of a three-layer systems landscape, including development, testing, and production systems. In theory, this layered approach should help to keep the production system stable and consistent. However, this approach has several downsides when applied to real-world scenarios:

The development and testing systems do not reflect the hardware specifications of the production system.

Features tested on the testing systems are mainly tested in isolation.

The load on testing systems is not comparable to that of a production system.

Moreover, test cases only cover the happy path, which unit tests already cover. They do not cover the combination of several functionalities, let alone the interaction with the whole software suite, which is often not feasible to test anyway. Another aspect to consider is data. The amount of data available in development and testing systems is only a fraction of the data used in production systems, so the behavior is not comparable.

With cloud software, the whole software lifecycle management and the provisioning of suitable hardware often become the responsibility of software vendors and their service providers. With this come many new opportunities that are not fully available when providing on-premise software. At Celonis, we came up with one such idea: testing our software directly in production. As a software user, you may think: “That is not an innovation, it seems almost all software vendors are doing this.” However, our approach neither interferes with the user experience nor degrades performance. We are aiming for exactly the opposite: improving our CI/CD pipeline while also improving quality and avoiding regressions.

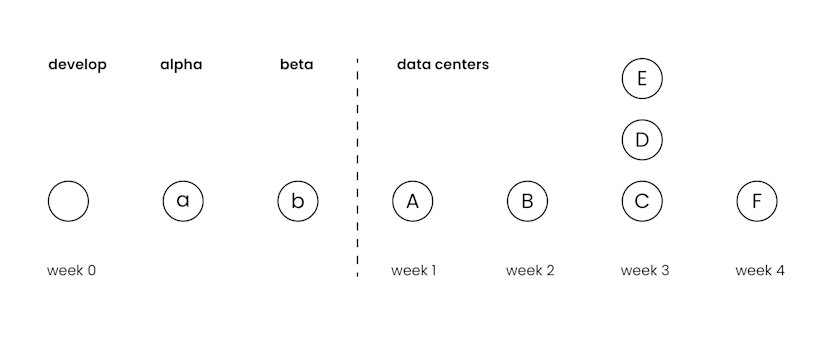

The current deployment strategy involves some manual work and takes time, but also guarantees a certain level of quality. Celonis offers its services around the globe, with data centers distributed worldwide. Instead of rolling out changes globally, we decided to deploy our software in stages: data center by data center, every week on the weekend. This approach gives us several advantages, ranging from a limited number of affected customers in case of errors to minimal response times, since engineers work in the same time zone as the affected data center.

With a growing number of data centers, the process becomes more involved and tends to take longer. The feature parity around the globe that customers expect is not given, and certain features may be used only in certain regions. This delays feedback and adds potential overhead, because hotfixes have to be applied to all data centers along the line.

Testing in Production

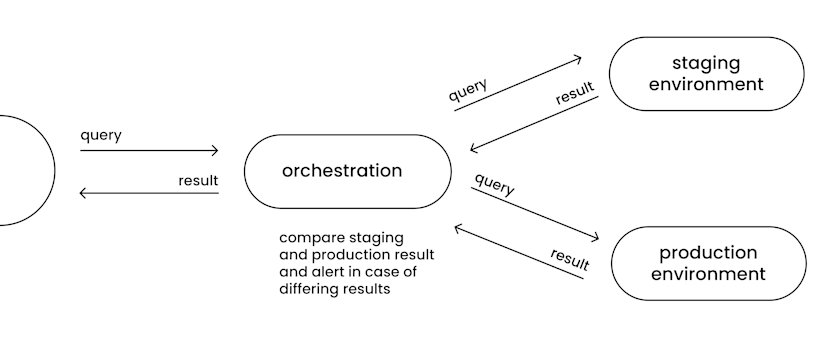

As we build an analytics database called SaolaDB, we have created a well-defined interface that we expect to return consistent responses. The idea is therefore to deploy the latest developed artifact next to the production system, but in its own staging environment, separated from all production usage. We call it staging because it adds another stage to the deployment process, in the hope of reducing some of the stages further down the road. The advantage of an analytics database is its read-only behavior, which allows us to use the same data that production uses. The persistence layer used for cold data, e.g. internal caches or indexes, is kept separate and is filled only if the use cases ask for it.

When a database query arrives in the system, the orchestration layer distributes it to both the production and the staging service. To avoid interference and performance regressions, the result of the production system is returned directly to the requester. That result is also stored temporarily in the orchestration layer and compared asynchronously with the result of the staging system. If the results of the two systems differ, log messages with the relevant context are generated and, with the help of monitoring and alerting, the respective stakeholders are notified directly.
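The flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the actual Celonis orchestration layer: the `Orchestrator` class, the `production_call` and `staging_call` coroutines, and the `mismatches` list are hypothetical names, and results are assumed to be comparable with plain equality.

```python
import asyncio
import logging

logger = logging.getLogger("shadow_compare")


class Orchestrator:
    """Hypothetical orchestration layer that mirrors queries to staging."""

    def __init__(self, production_call, staging_call):
        # Both arguments are assumed to be async callables: query -> result.
        self.production_call = production_call
        self.staging_call = staging_call
        self.pending = set()    # background comparisons still running
        self.mismatches = []    # queries whose results differed or failed

    async def handle(self, query):
        # Send the query to staging without waiting for its answer.
        staging_task = asyncio.create_task(self.staging_call(query))
        try:
            production_result = await self.production_call(query)
        except Exception:
            staging_task.cancel()  # nothing to compare against
            raise
        # Compare in the background so the requester is never delayed.
        compare = asyncio.create_task(
            self._compare(query, production_result, staging_task)
        )
        self.pending.add(compare)
        compare.add_done_callback(self.pending.discard)
        return production_result

    async def _compare(self, query, production_result, staging_task):
        try:
            staging_result = await staging_task
        except Exception as exc:
            logger.warning("staging failed for query=%r: %r", query, exc)
            self.mismatches.append(query)
            return
        if staging_result != production_result:
            logger.warning("result mismatch for query=%r", query)
            self.mismatches.append(query)

    async def drain(self):
        # Wait for all outstanding comparisons, e.g. on shutdown or in tests.
        if self.pending:
            await asyncio.gather(*list(self.pending))
```

The requester only ever waits for `production_call`. A slow or failing staging service shows up as a log message, which monitoring and alerting can then pick up, and not as user-facing latency.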

Even with a test-driven development approach, certain errors are detected rather late in the software development cycle. This happens mainly because of tradeoffs between development velocity and the completeness of the tests and data used. Even extended nightly and performance tests miss certain aspects, as mentioned above. By testing in the production environment, we’re able to utilize real-world customer data and use cases while respecting the same privacy setup as the production system. What’s more, the artifact is tested inside the staging environment, within the production system, so the corresponding infrastructure is tested as well. Setting up hardware for staging that is similar to production also ensures that, e.g., memory limits and thread counts are comparable to the production use cases. But does it mean you have to double your cloud infrastructure? Well, you could, but we decided to pick certain use cases that cover a lot of functionality and are used frequently. This comes with a disadvantage: it is not easy to model the load on the staging system so that it is similar to the production environment. A further downside is that the approach can only spot regressions, not bugs in new features, as there is nothing in production to compare new functionality against. Also, fixing a bug that exists in production could result in many false-positive reports, as the results then naturally differ.
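Mirroring only selected use cases could be expressed as a small policy object that the orchestration layer consults before forwarding a query to staging. The sketch below is an assumption for illustration: `MirrorPolicy`, the use-case names, and the optional `sample_rate` are hypothetical and not described in this article.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class MirrorPolicy:
    """Hypothetical policy deciding which queries are mirrored to staging."""

    mirrored_use_cases: frozenset   # use cases chosen for mirroring
    sample_rate: float = 1.0        # assumed knob: fraction of queries mirrored

    def should_mirror(self, use_case: str, query_id: str) -> bool:
        if use_case not in self.mirrored_use_cases:
            return False
        # Hash the query id into [0, 1) so the decision is deterministic.
        digest = hashlib.sha256(query_id.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:8], "big") / 2**64
        return bucket < self.sample_rate


policy = MirrorPolicy(frozenset({"dashboard", "export"}))
print(policy.should_mirror("dashboard", "q-1"))  # mirrored use case
print(policy.should_mirror("admin", "q-2"))      # not selected
```

A deterministic hash instead of a random draw means the same query is always either mirrored or skipped, which makes a reported mismatch easier to reproduce.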

Challenges and Learnings

When we came up with the idea, we thought it would be straightforward, but reality proved us wrong. We identified two main challenges and key learnings:

A highly concurrent and heavily used system brings a lot of synchronization and consistency problems. The happy path is still somewhat straightforward, but there are many edge cases that must be handled to avoid missed comparisons or false positives in the monitoring.

The implementation is only one aspect. The true added value comes from a sound operating model, from selecting the hardware and workload for the staging infrastructure to integrating monitoring and alerting into the development process.

If you are interested in further results and concrete numbers that show how the staging system helps us improve our CI/CD pipeline and pushes our quality, please stay tuned.

Like what we do at Celonis? Our Product and Engineering teams are growing globally! Visit our Careers page to learn more: https://www.celonis.com/careers/jobs/